Project: CoShare: a Multi-Pointer Collaborative Screen Sharing Tool

Existing tools for screen sharing and remote control only allow a single user to interact with a system while others are watching. Collaborative editors and whiteboards allow multiple users to work simultaneously, but only offer a limited set of tools. With CoShare, we combine both concepts into a screen sharing tool that gives remote viewers a mouse pointer and a text cursor so that they can seamlessly collaborate within the same desktop environment. We have developed a proof-of-concept implementation that leverages Linux's multi-pointer support so users can control applications in parallel. It also allows limited sharing of the clipboard and dragging files from the remote viewer’s desktop into the video-streamed desktop. In focus groups, we gathered user requirements regarding privacy, control, and communication. A qualitative lab study identified further areas for improvement and demonstrated CoShare’s utility.

Status: ongoing

Runtime: 2021 -

Participants: Martina Emmert, Andreas Schmid, Raphael Wimmer, Niels Henze

Introduction and Motivation

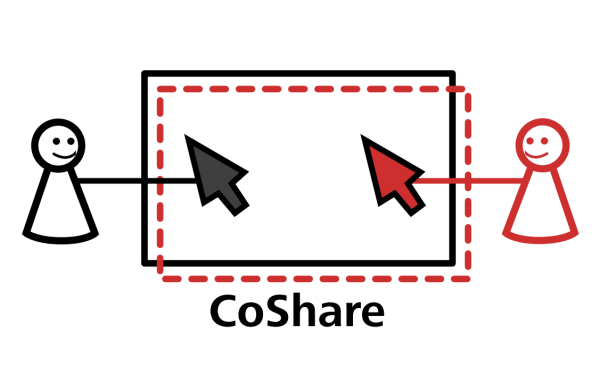

Remote collaboration has become ever more common in recent years. Most applications for synchronous remote collaboration can be grouped into two categories: screen sharing and collaborative editing. Most video conferencing software allows people to stream their screen’s contents to other participants in a meeting. However, there is always a clear distinction between presenter and viewers. Viewers may view, comment on, or sometimes annotate the shown content, but they cannot interact with it directly. In contrast, web-based collaborative editors, such as Google Docs or Miro, provide a document or canvas in which multiple people can edit content simultaneously with the same editing powers and tools. However, all collaboration happens on a remote server, within a single document, and using the provided set of tools.

With CoShare, we explore a different type of remote collaboration: multi-pointer interactive screen sharing. The hosting user not only streams their screen to visitors but also allows them to interact with the screen contents as if it were their own computer. Each visitor controls their own pointer and text cursor. To learn about the technical, interactional, and social aspects of such an interaction model, we developed a proof-of-concept prototype for sharing (part of) a Linux desktop with a single remote visitor.

Design and Implementation

With CoShare, our goal is to explore how screen sharing can be turned from a broadcasting medium into an interactive medium. Our interaction concept is inspired by physically co-located collaborative work where visitors are invited into the host’s home or office, given access to facilities and tools, and contribute equally to the task at hand. Using a design science approach, we iteratively developed a prototype of a collaborative screen-sharing application. First, we gathered requirements and attitudes in two focus groups. Based on the requirement analysis, we developed a proof-of-concept prototype and evaluated its utility and limitations in a small user study.

Requirement Analysis

To learn about the needs of users in remote collaborative work, we conducted two remote focus groups via Zoom, with four and six participants, respectively. All participants were local media informatics students (21–26 years, 7m, 3f) and had experience with a variety of screen-sharing tools, for example for collaborating remotely with others on university projects. The selected group is representative of the typical audience we envision for CoShare.

Both focus groups had the same structure. First, groups of two participants were asked to consolidate the advantages and disadvantages of existing screen-sharing tools and collaborative work solutions. After that, we presented initial concepts for an interactive screen-sharing application, such as sharing a partial region of the host’s screen and redirecting the visitor’s mouse and keyboard input to the host’s desktop. Participants then discussed the concepts and made suggestions for the features’ implementation.

By consolidating the focus groups’ transcripts, we identified the following general topics (participant ID in parentheses): To preserve privacy, participants appreciated the opportunity to share arbitrary regions instead of a whole window or screen (6). The shared region should be highlighted at all times (3) and re-scaling should be possible (3). To interact with the shared content, mouse and keyboard input within the shared region should be propagated through the stream (5). Parallel interaction requires simultaneous and independent inputs (4). Visual cues should help to distinguish cursors (3). The application should include a direct way of transferring data between visitor and host, avoiding the need for a separate file sharing platform (4). The system should be as simple to use as possible, e.g., by leveraging and supporting established interaction techniques, such as copy/paste or drag/drop (5). Performance should be good enough not to distract users, and latency should be minimized (5).

Furthermore, participants suggested integrating these features into existing cross-platform video conference software (5), an option for the host to restrict the others’ interaction for security reasons (5), and the option to open shared files with their own preferred tools (3).

Design Decisions and Implementation

Based on the requirement analysis, as well as on consensus from related work, we made several design decisions for CoShare. For the first design iteration, we focused on basic interaction concepts, data transfer, and privacy features. Therefore, we postponed cross-platform support and security features, and only support a single visitor.

In the current prototype, the host can select an arbitrary rectangular screen region to be streamed. Parts of application windows in the selected region are shared while everything else remains private. On the host’s desktop, the shared area is indicated by a red border. On the visitor’s desktop, the stream is displayed within an application window. This way, all collaborators can have their own private area.

Independent cursors for each user allow for pointing, clicking, and typing simultaneously at different positions on the screen without interfering with each other’s input during parallel work. Each participant’s cursor is marked with a distinctly colored dot. Each participant’s keyboard is linked to their mouse pointer, so the window or widget focused by the mouse pointer receives keyboard input. This allows users to work in different applications on the host’s desktop in parallel. Applications that explicitly support multi-pointer interaction may also be used by multiple participants at once.

Visitors can copy and paste text through the shared screen using the usual shortcuts CTRL+C and CTRL+V without affecting the host’s clipboard. Visitors can transmit files to the host by dragging a file on their own computer into the application window. Dragging files from the host’s desktop to the visitor’s is not possible with the current prototype.

We developed CoShare for GNU/Linux as it is the only major operating system with native multi-pointer support and provides easy access to input events and the clipboard. The application is written in Python 3.8 with PyQt5 as UI toolkit. A few components are written in C.
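
The relationship between the visitor's stream window and the shared region on the host can be illustrated with a small coordinate mapping. The following is a minimal sketch and not CoShare's actual code: the `Region` class, the `window_to_host` function, and the assumption that the stream is scaled linearly to fill the visitor's window are hypothetical and only show how a pointer position inside the window could be translated into host desktop coordinates.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class Region:
    """Rectangular shared region on the host desktop, in host pixels (hypothetical type)."""
    x: int        # left edge on the host desktop
    y: int        # top edge on the host desktop
    width: int
    height: int


def window_to_host(region: Region, win_w: int, win_h: int,
                   px: int, py: int) -> Optional[Tuple[int, int]]:
    """Map a pointer position (px, py) inside the visitor's stream window
    to host desktop coordinates.

    Assumes the stream fills the whole window and is scaled linearly.
    Returns None if the pointer is outside the window, so that input
    outside the shared region is never forwarded.
    """
    if not (0 <= px < win_w and 0 <= py < win_h):
        return None
    host_x = region.x + px * region.width // win_w
    host_y = region.y + py * region.height // win_h
    return host_x, host_y


# Example: an 800x600 region at (100, 200) shown in a 400x300 window.
shared = Region(x=100, y=200, width=800, height=600)
print(window_to_host(shared, 400, 300, 200, 150))  # (500, 500)
```

Returning `None` for positions outside the window mirrors the idea that only input within the shared region reaches the host, while everything else stays private to the visitor.
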
Visitors can join the stream via a tray menu. The host then receives a registration request and starts streaming the shared screen region, which is displayed in a window on the visitor’s system. All real-time communication between host (server) and visitor (client) happens via UDP. For transferring files, we use a Flask HTTP server running on the host’s system. For the video stream, we use GStreamer.

We use MPX (Multi-Pointer X) to allow for parallel work with multiple mouse cursors and virtual keyboards. The visitor’s keyboard input is captured using evdev, and mouse events are retrieved via pynput. All captured input events within the visitor’s application window are sent to the host as evdev events. On the host’s side, received events are assigned to a virtual input device and input events are emitted using uinput.

The keyboard shortcuts CTRL+C/V are not forwarded to the host. Instead, when the visitor copies from the stream window, the host’s clipboard content is transferred to the visitor’s local clipboard. If they paste into the stream window, the visitor’s clipboard is sent to the host and pasted at the position of the visitor’s cursor. To allow for file transfer via drag and drop, we capture drop events on the stream window with PyQt5 and send the dropped file to the HTTP server running on the host’s machine, where a drop event is simulated.
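
The input path described above (evdev-style events sent over UDP, with CTRL+C/V intercepted on the visitor's side) can be sketched as follows. This is an illustrative sketch under stated assumptions, not CoShare's implementation: the wire format `EVENT_FORMAT`, the `ShortcutFilter` class, and the functions `pack_event`, `unpack_event`, and `send_event` are hypothetical. Only the numeric key codes are taken from the Linux input event codes.

```python
import socket
import struct

# Hypothetical wire format (network byte order):
# device id (1 byte), event type (2 bytes), code (2 bytes), value (4 bytes, signed)
EVENT_FORMAT = "!BHHi"

# Constants from the Linux input event codes
EV_KEY = 0x01
KEY_LEFTCTRL, KEY_RIGHTCTRL = 29, 97
KEY_C, KEY_V = 46, 47
CTRL_KEYS = (KEY_LEFTCTRL, KEY_RIGHTCTRL)


def pack_event(device_id, ev_type, code, value):
    """Serialize one evdev-style event into a fixed-size datagram payload."""
    return struct.pack(EVENT_FORMAT, device_id, ev_type, code, value)


def unpack_event(payload):
    """Inverse of pack_event; returns (device_id, ev_type, code, value)."""
    return struct.unpack(EVENT_FORMAT, payload)


def send_event(sock, host_addr, device_id, ev_type, code, value):
    """Send one event to the host over UDP (host_addr is an assumed (ip, port) tuple)."""
    sock.sendto(pack_event(device_id, ev_type, code, value), host_addr)


class ShortcutFilter:
    """Decides on the visitor's side what happens to each key event.

    handle() returns "copy" or "paste" when CTRL+C / CTRL+V is pressed,
    "drop" for the repeat/release events of an intercepted key, and
    "forward" for everything else. As a simplification, the CTRL key
    itself is still forwarded.
    """

    def __init__(self):
        self.held_ctrl = set()     # CTRL keys currently held down
        self.intercepted = set()   # C/V keys whose press was intercepted

    def handle(self, ev_type, code, value):
        if ev_type != EV_KEY:
            return "forward"
        if code in CTRL_KEYS:
            # value: 1 = press, 2 = auto-repeat, 0 = release
            if value == 0:
                self.held_ctrl.discard(code)
            else:
                self.held_ctrl.add(code)
            return "forward"
        if code in self.intercepted:
            if value == 0:
                self.intercepted.discard(code)
            return "drop"
        if value == 1 and self.held_ctrl and code in (KEY_C, KEY_V):
            self.intercepted.add(code)
            return "copy" if code == KEY_C else "paste"
        return "forward"
```

A caller would pass every captured key event through `ShortcutFilter.handle`, call `send_event` with a `socket.socket(socket.AF_INET, socket.SOCK_DGRAM)` only for `"forward"` results, and trigger the clipboard transfer for `"copy"` and `"paste"`. On the host, `unpack_event` would yield the values to emit on the virtual input device.
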

Evaluation

We evaluated CoShare in a small formative user study. We recruited twelve participants (21–25 years, 9m / 3f) with and without a technical background to cover different perspectives on the system and different approaches to solving the task. We designed a scenario which represents a realistic use case for collaboration: a teacher (host) and a tutor (visitor) work together to grade assignments of a language course and record students’ grades in a summary sheet. The assignments, short texts about the USA and Germany, were stored on the tutor’s computer. The teacher had an assessment sheet with a list of requirements for the assignments, the summary sheet, and a language tool to help find grammar and spelling mistakes.

For each group, both participants were seated at separate desks in our laboratory. On each desk, there was a PC running CoShare. The experimenter observed the study from a distance and took notes. First, we informed participants about the study’s procedure and asked them for demographic data with a questionnaire. Then, we introduced participants to the prototype and explained the task. Additionally, we provided a printed sheet with a task description and information on where to find required documents and tools on their computers. Each group graded eight texts in total, switching roles after the first half. As we intend CoShare to be used together with voice chat or video conference software, participants were allowed to talk to each other during the study, for example to discuss their strategy for solving the tasks.

The study was followed by a semi-structured interview to reflect on participants’ usage of CoShare. We asked them for a comparison between the host’s and the visitor’s role, their opinion on the provided features, whether they missed any features, and which problems occurred or might occur when using the system. We then asked them for advantages and disadvantages of CoShare compared to existing screen-sharing tools, and in which use cases they would benefit from an interactive screen share.

Results and Discussion

We transcribed and paraphrased all interviews and used inductive category formation to group results.

Streaming and Viewing: According to eight participants, sharing a selected region makes sense to preserve privacy and hide irrelevant areas. However, new windows might open within the shared region. Five participants found CoShare most useful when using a separate screen as shared space. Five participants wished to be able to re-scale the streamed region during runtime. Sometimes, compression artifacts degraded the stream and there was no indication for the viewer when the streamer stopped the stream.

Interacting with the Stream: Overall, participants appreciated interacting with the stream. Four participants noted that most applications do not support simultaneous input. Two participants found the mouse cursor indicator not clear enough.

Text and Data Transfer: Due to a bug with the shared clipboard, CoShare occasionally pasted the streamer’s clipboard content instead of the viewer’s when they pressed CTRL+V. For four participants, the clipboard worked flawlessly, and two participants did not use it. Four participants found the drag and drop feature simple and useful. Two participants had safety concerns, as the streamer cannot opt out of receiving files in the current state of development.

Feature Requests: All participants found CoShare useful for collaborating, but agreed that some kind of voice chat is necessary when working remotely. Six participants requested the option for streamers to bind the stream to an application window, so it can be moved or minimized without affecting the stream. Four participants wished to use an independent system to initialize the stream, so all users are equal. Six participants requested bi-directional data transfer. Annotations on the streamer’s screen, as well as stream recording and rewinding were mentioned by one participant each.

Advantages vs. Screen Sharing: Seven participants saw CoShare’s biggest advantage in the simple data transfer. Seven participants appreciated increased efficiency as they could do things themselves instead of describing them to the streamer. Two participants found CoShare’s context independence to be an advantage over cloud-based tools.

Use Cases: Participants identified several use cases for CoShare: teaching (3), group projects (4), pair programming (2), collaborative drawing (1), helping/guiding someone else (1), checking on a colleague’s progress without disturbing them (1), and remote meetings with friends (1) or colleagues (1).

Overall, participants liked the concept and its implementation. The focus groups and the study highlight important aspects that need to be considered when developing multi-pointer screen-sharing tools: Privacy and security concerns need to be addressed, and the corresponding mechanisms should be based on existing mental models. Making only a part of the screen accessible to visitors is a useful feature. Sharing clipboard contents and files is an important feature that needs to be fleshed out.

Conclusion and Future Work

We presented CoShare, a proof-of-concept implementation of a novel approach to computer-supported remote collaboration. We showed that interaction through a shared screen is possible with only a few low-level features such as input forwarding and data transfer. In contrast to traditional screen sharing, agency is distributed evenly between participants as all have simultaneous access to files and applications in the shared area. Furthermore, CoShare allows users to solve tasks with tools and workflows they are familiar with, as opposed to the restrictive, task-specific applications that come with current approaches to collaborative work. While most applications are not built with multi-pointer support in mind, CoShare allows for working in parallel on separate applications. For an ideal solution, true multi-pointer support would include features such as multiple cursors in a single text input widget. Solving this problem would require major modifications of existing software, for example by integrating multi-pointer support into established UI frameworks. Future development of CoShare will focus on multi-platform support, improved data transfer between participants, and integration of a video/voice channel. After implementing these features, we will be able to compare CoShare to established systems for remote collaborative work. Additionally, we plan to evaluate how to visually distinguish the different cursors effectively without distracting the users. The source code of CoShare is publicly available under an open source license.

Publications

Martina Emmert, Andreas Schmid, Raphael Wimmer, Niels Henze

Proceedings of the Mensch und Computer 2023

All Related Posts

Mensch und Computer 2023 (2023-09-06)

PDA was part of the MuC again, the annual German flagship conference on Human-Computer-Interaction and Usability.

Best Short Paper Award for CoShare at MuC '23 (2023-09-05)

CoShare: a Multi-Pointer Collaborative Screen Sharing Tool received the Best Short Paper award at MuC '23, the annual German flagship conference on Human-Computer-Interaction and Usability.