Project: Sketchable Interaction

Sketchable Interaction is a generic interaction concept that empowers end-users to compose custom user interfaces by drawing interactive regions that represent user interface components. By overlapping or linking such interactive regions, end-users can trigger effects which modify the graphical representations and data of these regions. The goal is to provide a system that better supports end-users in their current computing tasks and enables new methods of working with data.

Status: ongoing

Runtime: 2018 -

Participants: Jürgen Hahn, Raphael Wimmer

Keywords: Interaction Concept, End-User Empowerment, Post-WIMP GUIs, End-User Development

Goal

- create an extensible reference implementation of the Sketchable Interaction concept

- evaluate the reference implementation's validity and scalability

- evaluate an extension of the concept which incorporates physical artefacts, outfitted with interactive regions, in a projected AR setup

- demonstrate areas of application which Sketchable Interaction improves and demonstrate areas where limitations arise

News / Blog

Visit by Minister-President Dr. Markus Söder (2022-05-05)

We presented current and upcoming research.

Sketchable Interaction: Core Interaction Concepts (2019-06-24)

Learn more about Sketchable Interaction's three core interaction concepts: sketching, collision, and linking.

Sketchable Interaction: Introduction and Terminology (2019-04-24)

Learn about the concept of Sketchable Interaction (SI) and its terminology.

PDA Group at CHI 2018 (2018-04-21)

We will present a poster and a workshop paper at CHI 2018.

Status

Concept

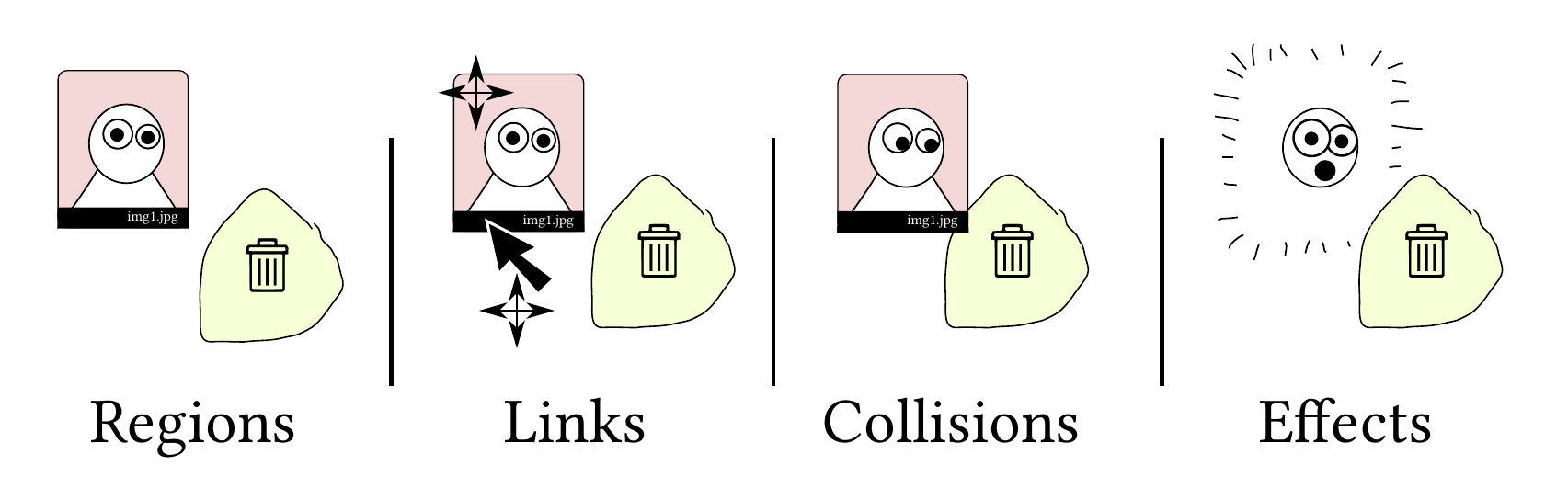

Sketchable Interaction consists of four core principles.

Everything within SI is represented as an interactive region.

Such regions carry effects which allow end-users to perform actions within the user interface they composed from regions.

Effects are triggered once two regions touch or overlap.

This is called a collision of regions and may lead to a change in the graphical representation of the regions, their data, or both.
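The collision principle can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions: regions are approximated by axis-aligned bounding boxes, an effect is a plain callable, and all names (`Region`, `collides`, `update`, `paint_red`) are hypothetical rather than part of SIGRun or PySI.

```python
from dataclasses import dataclass, field
from typing import Callable, List


def no_effect(source: "Region", target: "Region") -> None:
    """Default effect: colliding with this region changes nothing."""


@dataclass
class Region:
    """Hypothetical interactive region, approximated by its bounding box."""
    name: str
    x: float
    y: float
    width: float
    height: float
    color: str = "gray"
    data: List[str] = field(default_factory=list)
    # Assumption: each region carries exactly one effect, applied to the other region.
    effect: Callable[["Region", "Region"], None] = no_effect


def collides(a: Region, b: Region) -> bool:
    """Return True if the bounding boxes of a and b touch or overlap."""
    return (a.x <= b.x + b.width and b.x <= a.x + a.width
            and a.y <= b.y + b.height and b.y <= a.y + a.height)


def update(regions: List[Region]) -> None:
    """Apply the effects of every pair of colliding regions in both directions."""
    for i, a in enumerate(regions):
        for b in regions[i + 1:]:
            if collides(a, b):
                a.effect(a, b)
                b.effect(b, a)


def paint_red(source: Region, target: Region) -> None:
    """Example effect that changes the graphical representation of the target."""
    target.color = "red"


brush = Region("brush", 0, 0, 10, 10, effect=paint_red)
note = Region("note", 5, 5, 10, 10)       # overlaps the brush
far = Region("far", 100, 100, 10, 10)     # does not touch anything
update([brush, note, far])
print(note.color, far.color)  # red gray
```

Only the region that overlaps the brush changes its color; an effect that modifies `data` instead of `color` would follow the same pattern.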

Alternatively, two or more regions can be linked to each other so that they change together whenever the linked attribute of one of them is modified.

For example, the position of region A is linked to the position of region B.

Therefore, every positional change of region A is also applied to region B.

Regions can be linked arbitrarily as long as their respective effects contain the necessary implementations of the desired behaviour.

For instance, the position of region A may instead be linked to the color of region B.

In that case, the color of region B becomes brighter or darker depending on the position of region A.
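Both linking examples can be sketched as follows. The sketch assumes that a link stores a target region together with an action that receives the old and new value of the linked attribute; the `Region` class, the action signature, and `CANVAS_WIDTH` are hypothetical and only illustrate the idea, not the PySI API.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Tuple

# Hypothetical signature: (target region, old source value, new source value).
LinkAction = Callable[["Region", Any, Any], None]

CANVAS_WIDTH = 1000.0  # assumed canvas width used to normalize positions


@dataclass
class Region:
    name: str
    position: Tuple[float, float] = (0.0, 0.0)
    brightness: float = 0.5  # 0.0 is darkest, 1.0 is brightest
    links: Dict[str, List[Tuple["Region", LinkAction]]] = field(default_factory=dict)

    def link(self, attribute: str, target: "Region", action: LinkAction) -> None:
        """Link one of this region's attributes to a target region."""
        self.links.setdefault(attribute, []).append((target, action))

    def set_attribute(self, attribute: str, value: Any) -> None:
        """Change an attribute and propagate the change along its links."""
        old = getattr(self, attribute)
        setattr(self, attribute, value)
        # Note: this sketch does not guard against cyclic links.
        for target, action in self.links.get(attribute, []):
            action(target, old, value)


def follow_position(target: Region, old: Any, new: Any) -> None:
    """Position-to-position link: apply the same positional change to the target."""
    dx, dy = new[0] - old[0], new[1] - old[1]
    target.set_attribute("position", (target.position[0] + dx, target.position[1] + dy))


def position_to_brightness(target: Region, old: Any, new: Any) -> None:
    """Position-to-color link: the further right the source, the brighter the target."""
    target.set_attribute("brightness", min(1.0, max(0.0, new[0] / CANVAS_WIDTH)))


a = Region("A", position=(100.0, 100.0))
b = Region("B", position=(400.0, 50.0))
c = Region("C")
a.link("position", b, follow_position)
a.link("position", c, position_to_brightness)

a.set_attribute("position", (250.0, 120.0))
print(b.position)    # (550.0, 70.0)
print(c.brightness)  # 0.25
```

The action function plays the role of the "necessary implementation of the desired behaviour": a link between two attributes is only meaningful if some code defines how a change of one translates into a change of the other.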

The videos below show SI in action and were created for specific presentations (LIVE 2020 and CHI 2021).

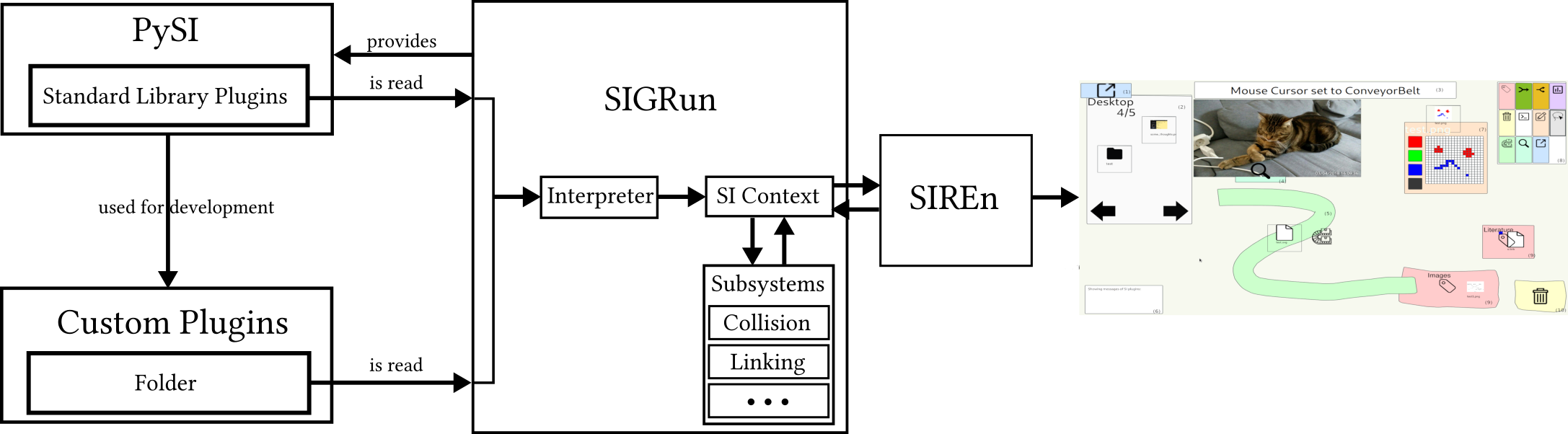

Reference Implementation of the Sketchable Interaction concept

The Sketchable Interaction concept is implemented as a runtime environment called the Sketchable Interaction General Runtime (SIGRun). Developers extend SIGRun with functionality for end-users by adding Plugins. SIGRun by itself therefore provides no business logic which end-users can use to create user interfaces; the set of available Plugins determines what end-users can draw and how they can customize their user interfaces. The image below provides an overview of the complete architecture of a Sketchable Interaction system with SIGRun at its core.

A Sketchable Interaction Rendering Engine (SIREn) can utilize the small API of SIGRun to visualize the state which SIGRun manages.

The source code of SIGRun (and SIREn) is publicly available and open-source via: https://github.com/PDA-UR/Sketchable-Interaction

Disclaimer: Bear in mind that the software linked above is a prototype and may change at any point, including breaking changes with no regard for backwards compatibility.

Plugins

Plugins are written in Python for logic and QML for visualization and styling.

Each Plugin represents a specific effect which end-users can draw as an interactive region within an SI context.

Developers utilize the PySI API to build new Plugins and add QML for specific styling.

Newly created Plugins simply have to be placed in the plugins folder of the directory containing the SI system application.

SIGRun detects functional Plugins automatically and omits broken ones, e.g. Plugins which are syntactically incorrect.
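How such a filter might work can be sketched with Python's standard library. The sketch assumes that "functional" only means "parses without a syntax error", which is the one criterion named above; the function name and the demo files are hypothetical, and SIGRun's actual checks may be stricter.

```python
import ast
import tempfile
from pathlib import Path
from typing import List


def find_functional_plugins(plugin_dir: str) -> List[Path]:
    """Return the Python files in plugin_dir that parse without syntax errors."""
    functional = []
    for path in sorted(Path(plugin_dir).glob("*.py")):
        try:
            ast.parse(path.read_text(encoding="utf-8"), filename=str(path))
        except (SyntaxError, ValueError) as error:
            # Broken plugin: report it and continue with the next file.
            print(f"omitting broken plugin {path.name}: {error}")
            continue
        functional.append(path)
    return functional


# Demo with a temporary folder standing in for the plugins directory.
with tempfile.TemporaryDirectory() as plugins:
    Path(plugins, "good.py").write_text("class Good:\n    pass\n", encoding="utf-8")
    Path(plugins, "broken.py").write_text("class Broken(:\n", encoding="utf-8")
    names = [path.name for path in find_functional_plugins(plugins)]

print(names)  # ['good.py']
```

Parsing instead of importing means that a broken file is rejected without executing any of its code.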

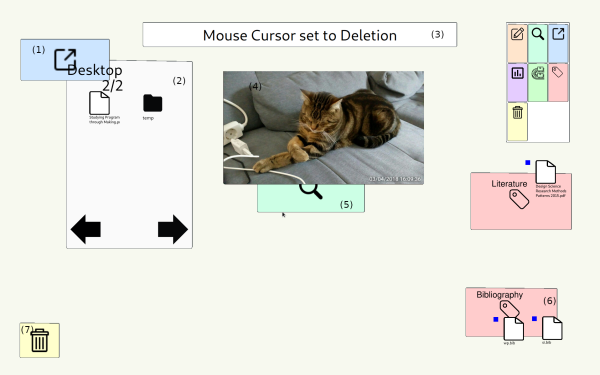

Every usable element of a Sketchable Interaction system is represented as a Plugin containing a specific effect which is shown to end-users as an interactive region.

This includes the canvas on which end-users draw new regions as well as folders, files, the mouse cursor, etc.

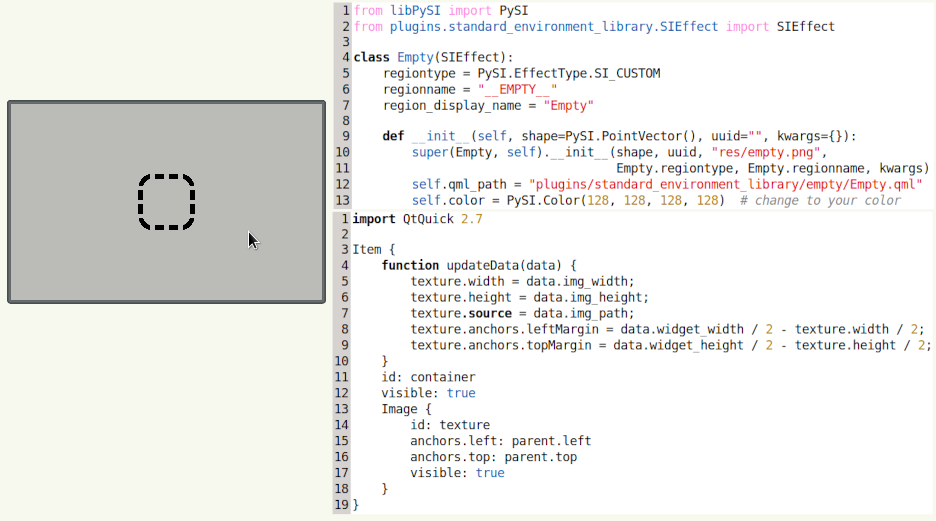

A minimal implementation of a Plugin which provides no functionality at all is called an empty Plugin.

The rendering output of an interactive region with the effect empty is shown on the left of the image below.

To the right are two segments of code.

The upper code segment is the minimal Python code required to successfully create a Plugin which SIGRun accepts.

The lower code segment is additional QML code required to add the placeholder texture in the center of the gray interactive region.

The current collection of Plugins for the Sketchable Interaction reference implementation is publicly available and open-source via: https://github.com/PDA-UR/Sketchable-Interaction-Plugins

Disclaimer: Bear in mind that the software linked above is a prototype and may change at any point, including breaking changes with no regard for backwards compatibility.

Evaluation and Deployment

Evaluation of Interactive Regions Representing *Folders* for Simple File Management Tasks

We implemented the Bubble Clusters concept[1] as Plugins for SIGRun. End-users can draw them as interactive regions. These regions are then usable as Folders and allow for performing simple file management tasks, according to the Sketchable Interaction concept and its four core principles.

We performed a user study with 30 participants (20 male, 10 female; 17 to 58 years old, M=30.03, SD=12.43) to evaluate bubble clusters in Sketchable Interaction for use as Folders and to compare them to the status quo represented by the standard Windows Explorer. Based on the literature [2, 3, 4, 5, 6, 7, 8], we identified three tasks for the user study.

- Folder creation and sorting of items

  - sorting function

  - Windows: create two new folders, one for each file type, and move the present files into them based on their type

  - Sketchable Interaction: create two interactive regions (bubble clusters), one for each file type, and put the files in them accordingly

- Find a specific file in a folder

  - Windows: four subfolders with image and text files, of which all pictures of a bird have to be moved by drag and drop to a new folder

  - Sketchable Interaction: four interactive regions (bubble clusters) containing image and text files, of which all pictures of a bird have to be moved to a newly made interactive region

- All files are first collected and then sorted for further use

  - Windows: four subfolders, all elements of which have to be moved to one of those subfolders; after that, either all image files or all text files have to be moved to another subfolder

  - Sketchable Interaction: four interactive regions (bubble clusters) containing files, which are merged and split using other Plugins which were implemented as part of the Bubble Clusters concept

The results of the study indicate that SI and Windows Explorer do not differ significantly for this specific set of tasks. However, in absolute values, SI outperformed Windows Explorer across all tasks by ca. 15 seconds. In particular, participants completed task 3 a little more than 2.5 times faster with SI (~26 seconds) than with Windows Explorer (~67 seconds).

We plan to replicate this study with completely new participants in order to re-evaluate these results.
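The factor reported for task 3 follows directly from the two approximate completion times. A short check, using only the rounded values stated above (the variable names are illustrative):

```python
si_seconds = 26.0       # approximate task 3 completion time with SI
windows_seconds = 67.0  # approximate task 3 completion time with Windows Explorer

speedup = windows_seconds / si_seconds
saved = windows_seconds - si_seconds
print(f"{speedup:.2f}x faster, {saved:.0f} s saved")  # 2.58x faster, 41 s saved
```

Because the inputs are rounded, the resulting factor is approximate as well.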

References in this section:

[1] Watanabe, N., Washida, M., & Igarashi, T. (2007, October). Bubble clusters: an interface for manipulating spatial aggregation of graphical objects. In Proceedings of the 20th annual ACM symposium on User interface software and technology (pp. 173-182).

[2] Bergman, O., Whittaker, S., Sanderson, M., Nachmias, R., & Ramamoorthy, A. (2010, December). The effect of folder structure on personal file navigation. Journal of the American Society for Information Science and Technology, 61(12), 2426–2441. doi: 10.1002/asi.21415

[3] Bergman, O. (2013, September). Variables for personal information management research. Aslib Proceedings, 65(5), 464–483. doi: 10.1108/AP-04-2013-0032

[4] Boardman, R., & Sasse, M. A. (2004). “Stuff goes into the computer and doesn’t come out”: a cross-tool study of personal information management. In Proceedings of the 2004 conference on Human factors in computing systems - CHI ’04 (pp. 583–590). Vienna, Austria: ACM Press. doi: 10.1145/985692.985766

[5] Barreau, D. K. (1995). Context as a factor in personal information management systems. Journal of the American Society for Information Science, 46(5), 327–339.

[6] Whitham, R., & Cruickshank, L. (2017, January). The Function and Future of the Folder. Interacting with Computers, iwc;iww042v1. doi: 10.1093/iwc/iww042

[7] Oh, K. E. (2019, May). Personal information organization in everyday life: modeling the process. Journal of Documentation, 75(3), 667–691. doi: 10.1108/JD-05-2018-0080

[8] Jones, W. P., Jones, W., & Teevan, J. (2007). Personal Information Management. University of Washington Press. (Google-Books-ID: byN4SPUt6RgC)

Deployment in the Field

From DateX to DateY, the Sketchable Interaction concept was successfully deployed in a real-life scenario in a pop-up store that is part of a local gastronomy business. For that deployment, SIGRun was extended to receive external data which represents physical artifacts on a table (TUIO 2.0 [1]). In this way, SIGRun is able to dynamically outfit arbitrary physical objects with interactive regions. The previously strictly digital SI context now represents physical-digital environments seamlessly. This was achieved by combining the software of the VIGITIA project (tracking of physical objects on a table and the table itself) with SIGRun (visualization, interaction).
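The idea of outfitting tracked physical objects with interactive regions can be sketched as follows. The sketch does not use the TUIO 2.0 protocol itself: `TrackedObject` is a hypothetical record standing in for already decoded tracking data, and `Region`, `sync_regions`, and the default effect name are assumptions made for illustration only.

```python
from dataclasses import dataclass
from typing import Dict, Iterable


@dataclass
class TrackedObject:
    """Hypothetical tracking record for one physical object on the table."""
    object_id: int
    x: float
    y: float
    width: float
    height: float


@dataclass
class Region:
    """Hypothetical interactive region attached to a physical object."""
    x: float
    y: float
    width: float
    height: float
    effect: str


def sync_regions(regions: Dict[int, Region], frame: Iterable[TrackedObject],
                 default_effect: str = "empty") -> None:
    """Create, move, or remove regions so that they match the tracked objects."""
    seen = set()
    for obj in frame:
        seen.add(obj.object_id)
        region = regions.get(obj.object_id)
        if region is None:
            # New physical object: outfit it with a region.
            regions[obj.object_id] = Region(obj.x, obj.y, obj.width, obj.height,
                                            default_effect)
        else:
            # Known object: let its region follow the object.
            region.x, region.y = obj.x, obj.y
            region.width, region.height = obj.width, obj.height
    for object_id in list(regions):
        if object_id not in seen:
            # Object left the table: remove its region.
            del regions[object_id]


regions: Dict[int, Region] = {}
sync_regions(regions, [TrackedObject(1, 10, 10, 5, 5), TrackedObject(2, 50, 20, 8, 8)])
sync_regions(regions, [TrackedObject(1, 12, 11, 5, 5)])  # object 1 moved, object 2 left
print(sorted(regions), regions[1].x, regions[1].y)  # [1] 12 11
```

Keeping the regions keyed by the tracker's object identifier lets a region keep its effect while the physical object is moved around the table.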

References in this section:

[1] Kaltenbrunner, M., & Echtler, F. (2018, June). The TUIO 2.0 Protocol: An Abstraction Framework for Tangible Interactive Surfaces. Proceedings of the ACM on Human-Computer Interaction, 2(EICS), Article 8, 35 pages. doi: 10.1145/3229090

Further Evaluations

- see Current Work section below

Current Work

Based on the reference implementation, evaluation projects with regard to the SI concept have been identified and are being worked on.

These projects are:

- Evaluation of Validity and Scalability

  - Emailing Tasks

    - Interview and Questionnaire for Requirements Engineering

    - Build the required plugins to perform a quantitative evaluation

    - Evaluate absolute and relative user performance

- Evaluation of Extensibility

  - Collaborative Design Tasks (e.g. scientific poster)

    - Extend SI to incorporate physical artifacts in a projected AR setup

    - Evaluate the collaborative task in a physical-digital context

Publications

Workshop *Rethinking Interaction* in conjunction with ACM CHI 2018

Users can define custom workflows by drawing regions on the desktop that determine how objects within these regions - such as digital documents or windows - behave.

Mensch und Computer 2019 (Poster)

First version of an extensible study design for a generic image-sorting task

Conference on Human Factors in Computing Systems 2021 Extended Abstracts (CHI ’21 Extended Abstracts)

Composing functional GUIs by drawing interactive regions.